The Hidden Costs of Prioritizing Commercial Gains Over AI Safety

A decentralized AI safety program leverages community-driven competitions to ensure AI systems are robust and secure.

As AI continues to integrate into the fabric of society, the push to develop faster and more efficient systems often overshadows the equally urgent need for AI safety. With the AI market projected to reach $407 billion by 2027 and an expected annual growth rate of 37.3% from 2023 to 2030, the prioritization of commercial interests raises a host of concerns about the safety and ethics of AI development.

Erosion of public trust

The public’s trust in AI technology is eroding because of the AI industry’s relentless focus on speed and efficiency. There is a significant disconnect between the industry’s ambitions and the public’s concerns about the risks associated with AI systems.

As AI becomes more ingrained in daily life, it’s crucial to be clear about how these systems work and the risks they may pose. Without transparency, public trust will continue to erode, hindering AI’s wide acceptance and safe integration into society.

Lack of transparency and accountability

The commercial drive to rapidly develop and deploy AI often leads to a lack of transparency regarding these systems’ inner workings and potential risks. The lack of transparency makes it hard to hold AI developers accountable and to deal with the problems AI can cause. Clear practices and accountability are important to build public trust and ensure AI is developed responsibly.

Spread of harmful biases and discrimination

AI systems are often trained on data that reflect societal biases, which can lead to discrimination against marginalized groups. When these biased systems are used, they produce unfair outcomes that negatively impact specific communities. Without proper oversight and corrective measures, these issues will get worse, underscoring the importance of focusing on ethical AI development and safety measures.

Concentration of power and wealth

Beyond biases and discrimination, the broader implications of rapid AI development are equally concerning. The rapid, unchecked development of AI tools risks concentrating immense power and wealth in the hands of a few corporations and individuals. This concentration undermines democratic principles and can lead to an imbalance of power. The few who control these powerful AI systems can shape societal outcomes in ways that may not align with the broader public interest.

Existential risks from unaligned AI systems

Perhaps the most alarming consequence of prioritizing speed over safety is the potential development of “rogue AI” systems. Rogue AI refers to artificial intelligence that operates in ways not intended or desired by its creators, often making decisions that are harmful or contrary to human interests.

Without adequate safety precautions, these systems could pose existential threats to humanity. The pursuit of AI capabilities without robust safety measures is a gamble with potentially catastrophic outcomes.

Addressing AI safety concerns with decentralized reviews

Internal security and safety measures carry a risk of conflicts of interest, as teams might prioritize corporate and investor interests over those of the public. Relying on centralized or internal auditors can compromise privacy and data security for commercial gain.

Decentralized reviews offer a potential solution to these concerns. A decentralized review is a process where the evaluation and oversight of AI systems are distributed across a diverse community rather than being confined to a single organization.

By encouraging global participation, these reviews leverage collective knowledge and expertise, ensuring more robust and thorough evaluations of AI systems. This approach mirrors the evolution of security practices in the crypto world, where audit competitions and crowd-sourced reviews have significantly enhanced the security of smart contracts — self-executing digital agreements.

AI safety in the crypto world

The intersection of AI and blockchain technology presents unique security challenges. As AI emerges as a growing sub-vertical within the crypto industry, projected to be worth over $2.7 billion by 2031, there is a pressing need for comprehensive AI and smart contract safety protocols.

In response to these challenges, Hats Finance, a decentralized smart bug bounty and audit competitions marketplace, is rolling out a decentralized AI safety program. By opening AI safety reviews to community-driven competitions, Hats Finance aims to harness global expertise to ensure AI systems are resilient and secure.

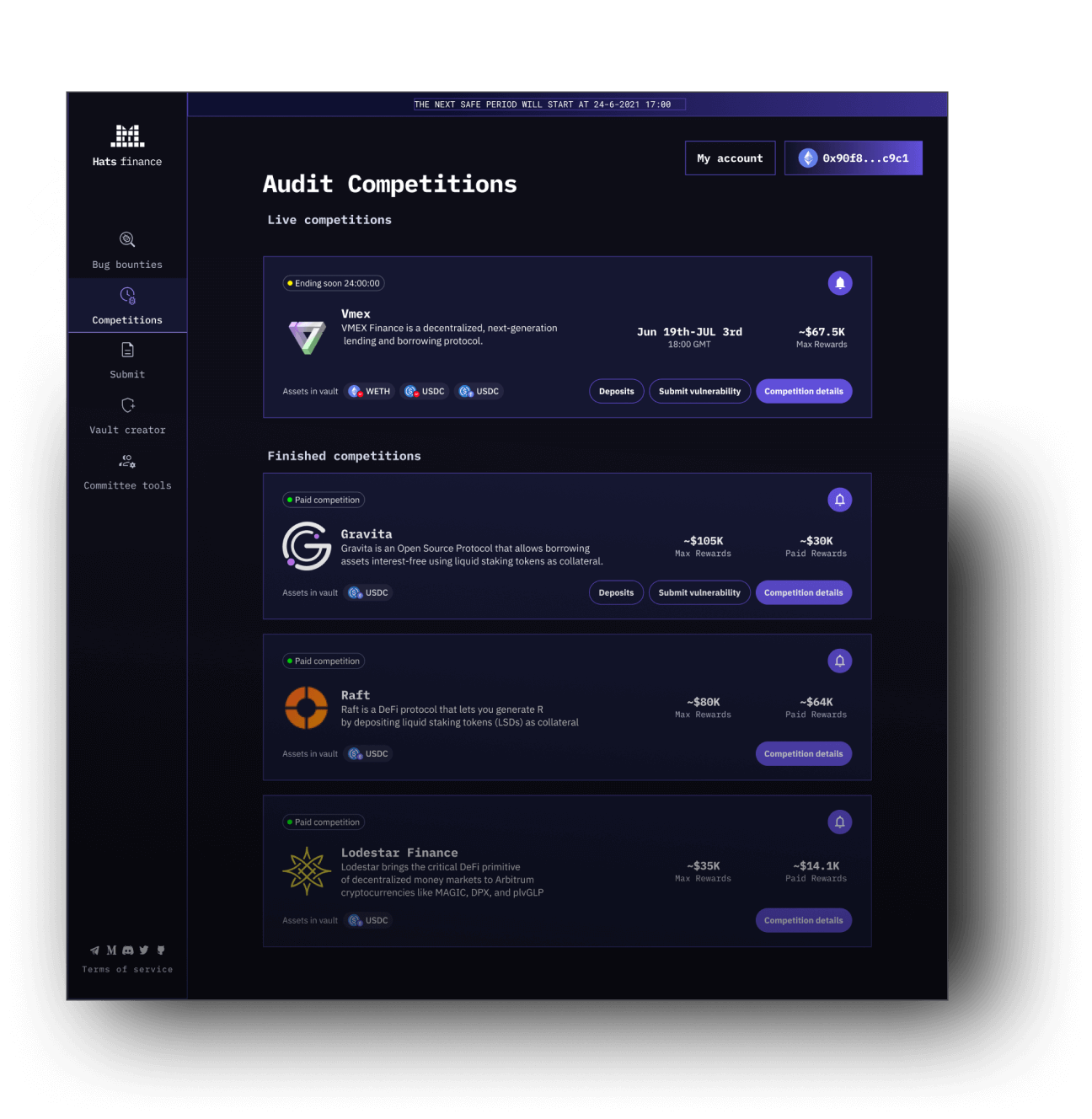

Web3 security researchers can participate in audit competitions for rewards. Source: Hats Finance

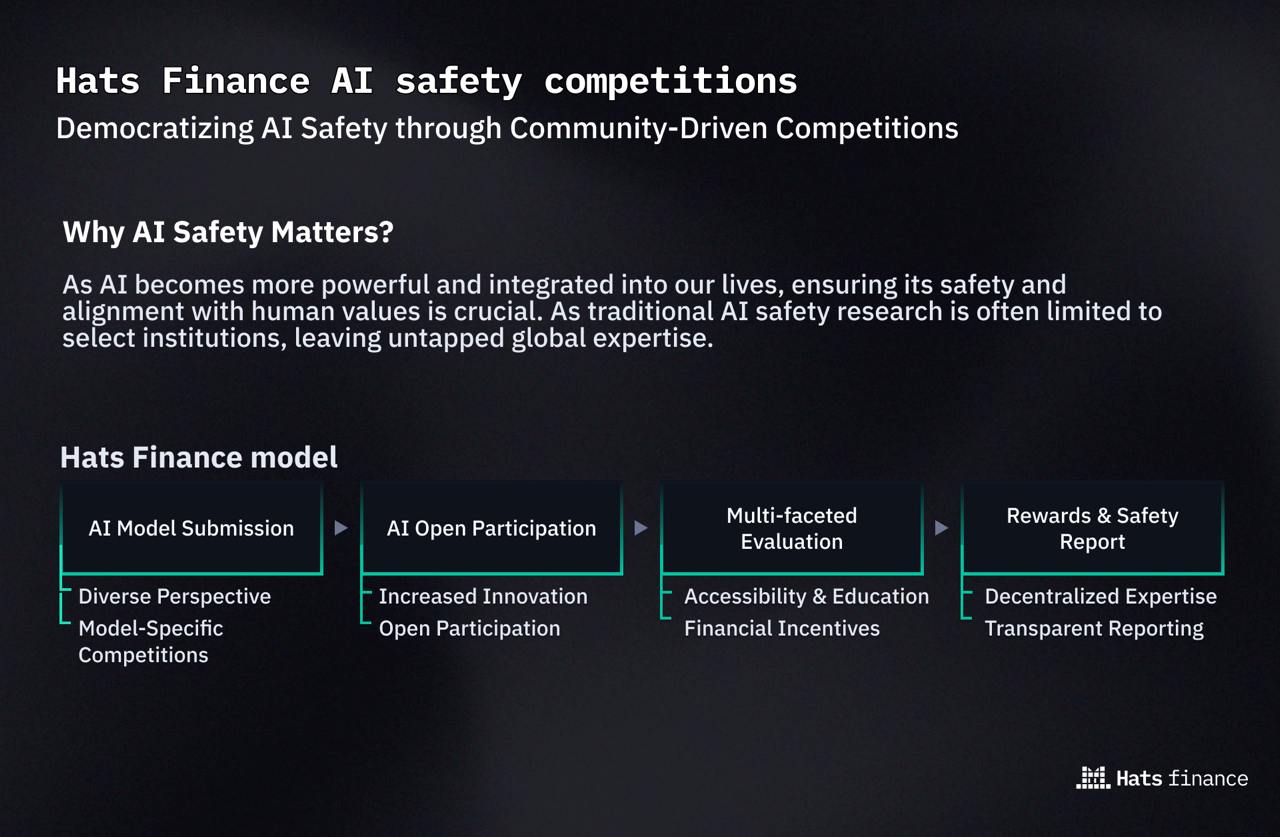

Traditional AI safety research has often been confined to select institutions, leaving a wealth of global expertise untapped. Hats Finance proposes a model where AI safety is not the responsibility of a few but a collective endeavor.

How decentralized AI review works

The first step in the Hats Finance process is developers submitting AI models. These developers, ranging from independent researchers to large organizations, provide their AI models for evaluation. By making these models available for review, developers are taking a crucial step toward transparency and accountability.

Once the AI models are submitted, they enter the open participation phase. In this stage, a diverse community of experts from around the world is invited to participate in the review process. The global nature of this community ensures that the review process benefits from a wide range of perspectives and expertise.

Next, the AI models undergo multifaceted evaluations where each model is rigorously assessed by a diverse group of experts. By incorporating various viewpoints and expertise, the evaluation process provides an analysis of the model’s strengths and weaknesses and identifies potential issues and areas for improvement.

After the thorough evaluation, participants who contributed to the review process are rewarded. These rewards serve as incentives for experts to engage in the review process and contribute their valuable insights.

Finally, a comprehensive safety report is generated for each AI model. This report details the findings of the evaluation, highlighting any identified issues and providing recommendations for improvement. Developers can use this report to refine their AI models, addressing any highlighted concerns and enhancing their overall safety and reliability.
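The steps above (submission, open participation, evaluation, rewards and a final report) can be sketched as a small data model. This is only an illustrative sketch, not Hats Finance's actual implementation: the `Review` and `Finding` classes, the severity weights and the proportional payout rule are all assumptions, since the article does not describe how findings are scored or how rewards are split.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical severity weights; the article does not specify a scoring scheme.
SEVERITY_WEIGHTS = {"low": 1, "medium": 3, "high": 9}


@dataclass
class Finding:
    reviewer: str
    severity: str  # must be a key of SEVERITY_WEIGHTS
    summary: str


@dataclass
class Review:
    """One submitted AI model that is open for community review."""
    model_name: str
    reward_pool: float
    findings: list[Finding] = field(default_factory=list)

    def submit_finding(self, reviewer: str, severity: str, summary: str) -> None:
        """Open participation: any reviewer may file a finding."""
        if severity not in SEVERITY_WEIGHTS:
            raise ValueError(f"unknown severity: {severity!r}")
        self.findings.append(Finding(reviewer, severity, summary))

    def rewards(self) -> dict[str, float]:
        """Assumed rule: split the pool in proportion to total severity weight."""
        weights: dict[str, int] = defaultdict(int)
        for finding in self.findings:
            weights[finding.reviewer] += SEVERITY_WEIGHTS[finding.severity]
        total = sum(weights.values())
        if total == 0:
            return {}
        return {name: self.reward_pool * w / total for name, w in weights.items()}

    def report(self) -> str:
        """Safety report: findings listed from most to least severe."""
        ordered = sorted(
            self.findings,
            key=lambda f: SEVERITY_WEIGHTS[f.severity],
            reverse=True,
        )
        lines = [f"Safety report for {self.model_name}"]
        lines += [
            f"- [{f.severity}] {f.summary} (reported by {f.reviewer})"
            for f in ordered
        ]
        return "\n".join(lines)


# Example run with made-up reviewers and findings.
review = Review("demo-model", reward_pool=1000.0)
review.submit_finding("alice", "high", "prompt injection bypasses content filter")
review.submit_finding("bob", "low", "inconsistent refusal wording")
payouts = review.rewards()
print(payouts)
print(review.report())
```

In this sketch a reviewer's share grows with the severity of what they report, which is one plausible way to incentivize deep reviews. A real program would also need to handle duplicate findings and disputes, which are left out here.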

Source: Hats Finance

The Hats Finance model democratizes the process and incentivizes participation, ensuring AI models are scrutinized by a diverse pool of experts.

Embracing the DAO structure for enhanced transparency

Hats Finance is transitioning to a decentralized autonomous organization (DAO) to further align with its goals. A DAO is a system where decisions are made collectively by members, ensuring transparency and shared governance. This shift, set to occur after the public liquidity bootstrapping pool sale and the token generation event of Hats Finance’s native token, HAT, aims to sustain the ecosystem of security researchers and attract global talent for AI safety reviews.

Source: Cointelegraph